Modern machine learning often assumes a simple rule: if you want a model to recognise something, you must show it many labelled examples. Zero-shot learning challenges that assumption. It describes a model’s ability to classify objects or complete tasks it has not explicitly seen during training, by using prior knowledge and meaningful descriptions. This idea is increasingly practical in real projects, which is why it is discussed in hands-on programmes such as a data science course in Chennai where learners explore how models generalise beyond curated datasets.

Understanding What Zero-Shot Learning Really Means

Zero-shot learning (ZSL) is not “guessing.” It is a structured form of generalisation. During training, the model learns relationships between words, concepts, and features, and it later applies those relationships to categories or tasks for which it was never given labelled examples.

A useful way to think about ZSL is this: instead of learning a category only from labelled examples, the model learns a bridge between inputs and meaning. That bridge can be built using:

- Semantic descriptions (text definitions of classes)

- Attribute vectors (human-defined properties like “has stripes”, “four legs”)

- Embeddings (numeric representations of concepts, images, and words)

- Natural-language instructions (prompts that describe the task)

When the model is asked to label a new class, it compares the input against the description or embedding of that class and decides where the input best fits. Large language models and modern multimodal models already learn this kind of representation during pretraining, which is why zero-shot methods are so common with them.
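As a rough illustration of the attribute-vector route, the sketch below scores an input against class descriptions written as binary attributes. Everything in it is an assumption made for the example: the attribute order, the three classes, and the idea that some upstream detector has already produced per-attribute probabilities.

```python
# Hypothetical attribute order: [has_stripes, four_legs, has_wings]
CLASS_ATTRIBUTES = {
    "horse": [0, 1, 0],
    "eagle": [0, 0, 1],
    "zebra": [1, 1, 0],  # "unseen" class: described by attributes, no labelled examples
}


def predict_class(attribute_scores, class_attributes):
    """Return the class whose attribute description best matches the scores.

    attribute_scores: per-attribute probabilities, assumed to come from
    some upstream attribute detector that is not shown here.
    """
    def match(description):
        # Reward agreement: p when the attribute is expected, 1 - p when it is not.
        return sum(
            p if expected else 1.0 - p
            for p, expected in zip(attribute_scores, description)
        )

    return max(class_attributes, key=lambda name: match(class_attributes[name]))


# Detector output for an image: probably striped, probably four-legged, no wings.
scores = [0.9, 0.8, 0.1]
print(predict_class(scores, CLASS_ATTRIBUTES))
```

The point of the design is that adding a new class only requires a new row of attributes, not new labelled images.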

How Zero-Shot Learning Works in Practice

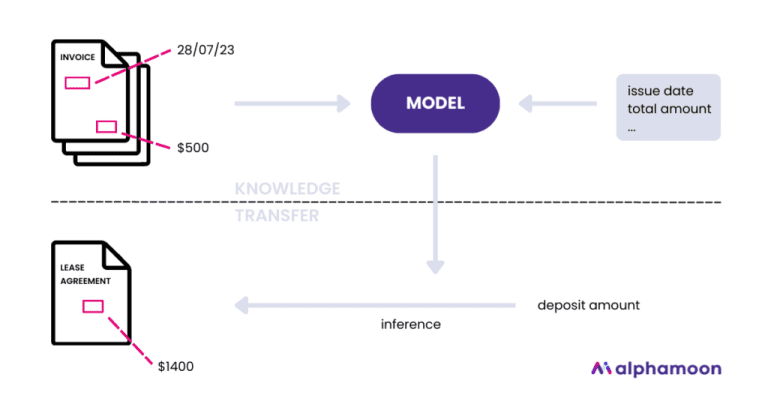

Learning a shared representation space

Many ZSL systems map both the input and the label description into a shared space. For example, an image is converted into a vector representation, and so is each candidate label (based on a text description). The label whose vector is most similar to the image vector becomes the prediction.

This approach reduces dependence on labelled examples for every new class, because the model can “understand” the label through language or attributes.
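A minimal sketch of that nearest-label step is shown below. The three-dimensional vectors are toy values standing in for the outputs of real image and text encoders, and the label phrasings are illustrative assumptions; only the cosine-similarity comparison is the part being demonstrated.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_predict(input_vector, label_vectors):
    """Pick the label whose vector is most similar to the input vector."""
    scores = {
        label: cosine_similarity(input_vector, vector)
        for label, vector in label_vectors.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Toy vectors (assumed); in practice these would come from trained encoders.
label_vectors = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_vector = [0.8, 0.3, 0.1]

best_label, scores = zero_shot_predict(image_vector, label_vectors)
print(best_label)
```

Adding a candidate class here means adding one more entry to `label_vectors`; nothing is retrained.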

Prompting and instruction following

In language models, zero-shot learning often appears as instruction following. You provide a task description—“Classify this review as positive, negative, or neutral”—and the model performs the task without being trained specifically on your dataset.

A key detail is prompt clarity. The model’s performance depends on how well the prompt defines:

- the expected labels,

- the decision rules,

- and the output format.

This is a practical skill: the better your task description, the more reliable your zero-shot output becomes.
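One way to keep those three elements explicit is to assemble the prompt from separate parts, as in the sketch below. The function names, the rule wording, and the sample review are hypothetical, and no model is called: the code only builds the prompt text and checks that a reply matches one of the expected labels.

```python
def build_zero_shot_prompt(text, labels, rules):
    """Assemble a prompt that states the labels, decision rules, and output format."""
    label_list = ", ".join(labels)
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"Classify the review into exactly one of these labels: {label_list}.\n"
        f"Decision rules:\n{rule_lines}\n"
        "Output format: reply with the label only, in lowercase.\n\n"
        f"Review: {text}\n"
        "Label:"
    )


def parse_label(model_reply, labels):
    """Map a raw reply to a known label, or None if it does not match any."""
    cleaned = model_reply.strip().lower().rstrip(".")
    return cleaned if cleaned in labels else None


LABELS = ["positive", "negative", "neutral"]
RULES = [  # illustrative rules, not a recommended standard
    "Use 'neutral' when the review states facts without a clear opinion.",
    "If praise and complaints are mixed, choose the stronger sentiment.",
]

prompt = build_zero_shot_prompt("The battery died after two days.", LABELS, RULES)
print(prompt)
print(parse_label(" Negative. ", LABELS))
```

Validating the reply against the label list is a cheap guard: anything that comes back as `None` can be retried or routed to manual review.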

Contrastive learning for text and images

For multimodal systems, contrastive learning is a common foundation. During training, the model learns to pull the embeddings of matching image-text pairs together and push mismatched pairs apart. At inference time, you can then do zero-shot classification by embedding each candidate label as text and choosing the one most similar to the image embedding.

This is why models can sometimes recognise niche categories from their names or descriptions, even when those exact labels were not part of a typical supervised training set.
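To make the training objective concrete, here is a small sketch of a symmetric, InfoNCE-style contrastive loss over a batch of paired vectors. This is one common formulation, not necessarily the one any particular model uses; the temperature value and the two-dimensional toy vectors are assumptions, and the vectors are taken to be unit-normalised already.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def contrastive_loss(image_vecs, text_vecs, temperature=0.1):
    """Symmetric contrastive loss; image i is assumed to match text i."""
    n = len(image_vecs)
    # Similarity of every image with every text, scaled by the temperature.
    logits = [[dot(img, txt) / temperature for txt in text_vecs] for img in image_vecs]

    def cross_entropy(row, target):
        # Numerically stable log-sum-exp minus the logit of the correct pair.
        m = max(row)
        log_sum = m + math.log(sum(math.exp(v - m) for v in row))
        return log_sum - row[target]

    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy([logits[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


texts = [[1.0, 0.0], [0.0, 1.0]]
aligned_images = [[1.0, 0.0], [0.0, 1.0]]  # each image points the same way as its text
swapped_images = [[0.0, 1.0], [1.0, 0.0]]  # each image points at the wrong text

loss_aligned = contrastive_loss(aligned_images, texts)
loss_swapped = contrastive_loss(swapped_images, texts)
print(loss_aligned < loss_swapped)
```

The loss is small when each image is closest to its own text and large when it is closest to someone else's, which is the pressure that produces a shared space usable for zero-shot comparison.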

Where Zero-Shot Learning Adds Real Value

Rapid prototyping for new categories

In many organisations, you cannot wait weeks to collect labels for every new category. Zero-shot methods help teams test ideas quickly:

- triaging support tickets into emerging issue types,

- tagging new product feedback themes,

- or classifying documents by policy topics.

This is often introduced early in practical learning paths, including a data science course in Chennai, because it reflects what teams do when data collection is expensive or slow.

Handling long-tail and rare classes

Supervised models struggle when some classes have very few examples. Zero-shot approaches can provide reasonable starting performance on rare categories by relying on semantic descriptions rather than example volume.

Personalisation without full retraining

Zero-shot prompting can adapt a general model to a specific business context without building a separate classifier for every variation. For instance, you can instruct the model to categorise sales calls using your internal taxonomy, provided you describe the taxonomy clearly.

Limitations and How to Use Zero-Shot Responsibly

Zero-shot learning is powerful, but it is not a replacement for validation.

Accuracy can vary by label wording

Because zero-shot classification relies heavily on semantic similarity, changing label names or descriptions can change outcomes: the same transaction may be classified differently under “Fraud” than under “suspicious transaction”. This makes careful label design important.
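A simple way to quantify this sensitivity is to run the same inputs under two label wordings and count how often the decision changes. The sketch below assumes you already have both sets of predictions; the wordings, the canonical class names, and the prediction lists are made-up illustration data, not measured results.

```python
def flip_rate(predictions_a, predictions_b):
    """Fraction of inputs whose predicted class differs between two runs."""
    if len(predictions_a) != len(predictions_b):
        raise ValueError("Both runs must cover the same inputs.")
    if not predictions_a:
        return 0.0
    changed = sum(1 for a, b in zip(predictions_a, predictions_b) if a != b)
    return changed / len(predictions_a)


def canonical(predictions, wording):
    """Translate wording-specific label names into shared class names."""
    return [wording[p] for p in predictions]


# Two hypothetical wordings for the same two underlying classes.
WORDING_A = {"fraud": "risky", "legitimate": "ok"}
WORDING_B = {"suspicious transaction": "risky", "normal transaction": "ok"}

# Illustrative predictions for the same four transactions under each wording.
preds_a = ["fraud", "legitimate", "legitimate", "fraud"]
preds_b = [
    "suspicious transaction",
    "suspicious transaction",
    "normal transaction",
    "suspicious transaction",
]

rate = flip_rate(canonical(preds_a, WORDING_A), canonical(preds_b, WORDING_B))
print(rate)  # 0.25: one of the four decisions changed
```

A high flip rate suggests the labels are doing too much of the work and need tighter descriptions.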

Bias and ambiguity are real risks

If the model’s training data contained skewed associations, zero-shot outputs can reflect those biases. Ambiguous tasks (for example, “Is this message urgent?”) can also produce inconsistent results unless you define clear criteria.

Use it as a baseline, then improve

A sensible workflow is:

- Start with zero-shot to establish a baseline and understand label separability.

- Collect a small labelled set for the hardest cases.

- Move to few-shot learning or fine-tuning when the business impact demands higher reliability.

This “baseline to improvement” approach is frequently emphasised in a data science course in Chennai because it mirrors real deployment decisions: start fast, then add rigour.
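The first two steps of that workflow can be sketched as a per-class check of zero-shot predictions against a small labelled sample. The ticket categories, the predictions, and the 0.7 threshold are arbitrary assumptions for the example; the classes that fall below the threshold are the ones where extra labelling is likely to pay off first.

```python
from collections import defaultdict


def per_class_accuracy(true_labels, predicted_labels):
    """Accuracy computed separately for each true class."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == prediction:
            correct[truth] += 1
    return {label: correct[label] / total[label] for label in total}


def hardest_classes(accuracy_by_class, threshold=0.7):
    """Classes whose zero-shot accuracy falls below the chosen threshold."""
    return sorted(
        label for label, accuracy in accuracy_by_class.items() if accuracy < threshold
    )


# Illustrative labelled sample and zero-shot predictions (not real results).
true_labels = ["billing", "billing", "login", "login", "login", "shipping"]
zero_shot_preds = ["billing", "billing", "login", "billing", "shipping", "shipping"]

accuracy = per_class_accuracy(true_labels, zero_shot_preds)
print(accuracy)
print(hardest_classes(accuracy))  # ['login']
```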

Conclusion

Zero-shot learning enables models to perform tasks and recognise categories they were not explicitly trained on, using semantic knowledge, shared representations, and well-defined instructions. It is especially useful for rapid prototyping, rare classes, and flexible classification workflows. However, it must be tested carefully, because performance can shift with label phrasing and task ambiguity. When applied with clear prompts and sensible evaluation, zero-shot learning becomes a practical tool—not a shortcut—and it fits naturally into modern development practices taught in a data science course in Chennai.